Excuse me, sorry to interrupt...

Interruptions are a software developer's worst nightmare. This is what you often hear. There is nothing worse than being interrupted while working hard on a problem, while you’re in the zone. You’ll lose your train of thought and the world collapses.

The idea is that in software development you are sometimes very deep into a problem, analysing code:

This orchestrator calls that service, it is managed by this class and talks to that queue. So this generator has these parameters and it all depends on… “hey I still need your time sheet”

Poof… everything gone....

JavaOne 2016, the Future of Java EE

Lately there have been a lot of rumors going around about the future of Java EE. Former Oracle employee Reza Rahman was one of the first to voice his concern about Java EE. It seemed that all development on the separate JSRs (Java Specification Requests) that make up Java EE 8 had ground to a halt and that Oracle was thinking about stopping Java EE development altogether.

Oracle finally gave some insight into their proposal for the future of Java EE during JavaOne 2016 (where I am right now).

What is Java EE?

First, let's take a step back...

Job titles when writing code

At the company I work for (JPoint) we don’t have job titles. Well, we do, but you’re free to pick one. Some people call themselves ‘software developer’, some have more luck as ‘software architect’, others label themselves ‘software craftsman’ and there might be a ‘software ninja/rockstar’ hanging around.

But I have a problem with that… none of those terms reflect what we do. Currently I’m sitting in a session at JavaOne and I have the feeling people don’t realize what their job actually is.

So, what does a software coder do?

When creating software we instruct...

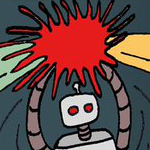

Saving the world with a code review

This morning I noticed the following tweet by fellow programmer (and runner) Arun Gupta:

Why code reviews are important? pic.twitter.com/8KyMo7Syis

— Arun Gupta (@arungupta) August 28, 2016

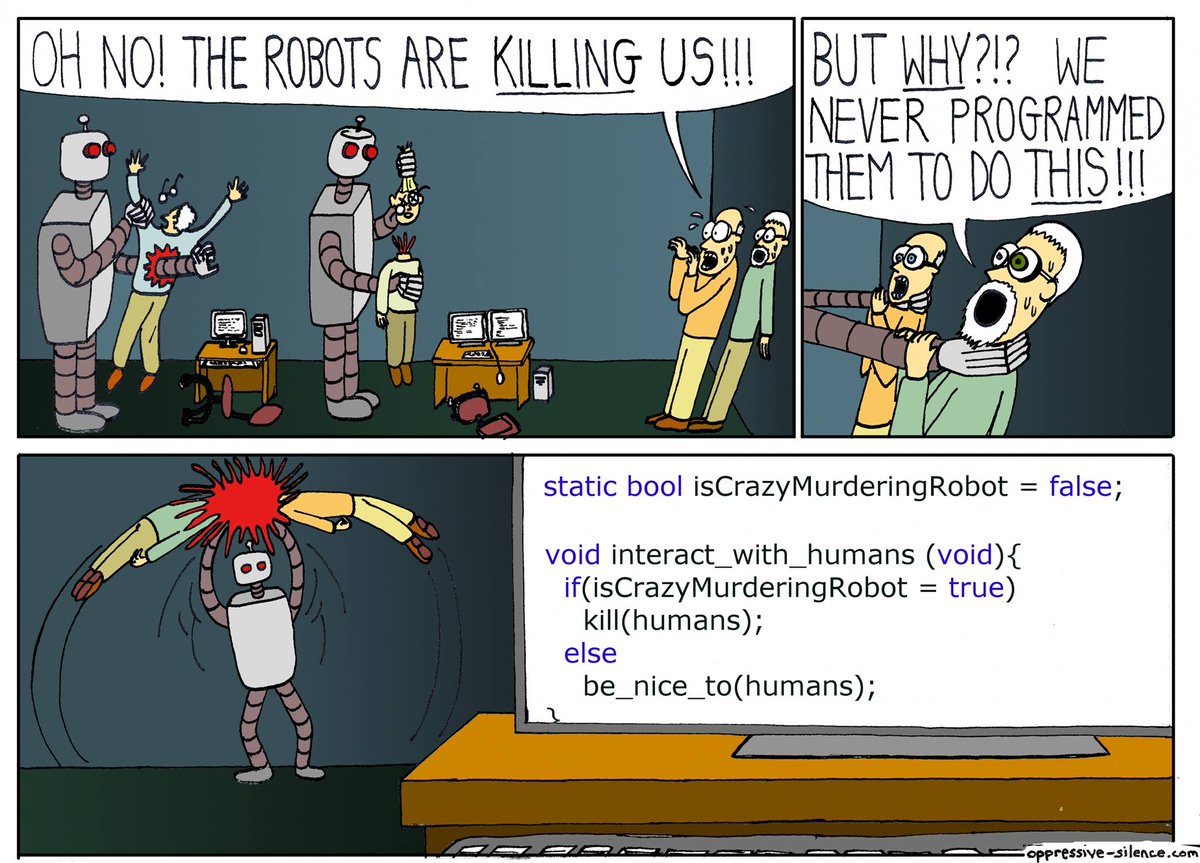

The tweet contained the following cartoon by ‘Oppressive Silence’. Check out their website for more laughs!

Solved by a code review?

The main question I’d like to ask:

Is this really something that you’ll find during a code review?

I think my answer below won’t surprise you, but...

Gender (in-)equality: my honest mistake

Gender equality and discrimination in IT is a hot topic. More and more women are (rightfully) speaking up and pointing out problem areas. There are some men who just don’t get it, those insensitive idiots; they are the reason IT is such a toxic, harsh environment for women.

So I thought…

This is a story about when I realized I am (unintentionally) one of those idiots.

Joy of Coding

This Friday I spoke at Joy of Coding in Rotterdam. An awesome conference and a real example for other conferences regarding gender equality. The main organizer is...

Speaking at Joy of Coding 2016

Most conferences I get to visit are about Java. Java frameworks, techniques, other JVM languages, all of them have pretty much the same focus. However: there is one conference on my agenda that is… different (in a good way!). Joy of Coding is all about (you’ve guessed it) the joy we experience while coding. The topics are very broad, some talks are technical, others are not.

Talking about game theory

At this edition of the Rotterdam-based conference I was invited to talk about game theory and game algorithms, and I explained this by going from a simple game,...

Speaking at Devoxx UK 2016

Last week I went to London with my colleague Bert Jan Schrijver for the Devoxx UK 2016 conference. The UK member of the international Devoxx conference family is smaller than its siblings. The event lasted three days: the first two were filled with talks and the last with hands-on labs. During the first two days there were four parallel tracks, giving the almost 1000 visitors enough content to pick from.

The quality of the speakers is very high in London. There are usually a lot of international speakers, probably...

How to become a software architect

Yesterday, on the vJUG mailing list, Gilberto Santos asked the following question:

I’m working as Software Engineer and as intend to grow up to architect position, now on I was wondering how to best way to get there , should be :

Java certifications

Masters

Both ?

I replied to him by email, but this might be something more developers are struggling with, so: time for a blog post!

Architects should code

There are two types of software architect:

- The one that draws Visio® diagrams

- The one that develops/codes

The first type of architect...

The Prettiest Code

This afternoon I started to wonder…

I’ve been a programmer now for 20+ years, but what is the best piece of code I’ve written in all these years?

The first thing that popped into my mind was this. It is a piece of code that can very quickly, in near linear time, generate de Bruijn sequences. I ‘invented’ it after reading a scientific paper that described how to quickly generate ‘Lyndon words’. I knew that with Lyndon words you could easily generate de Bruijn sequences. So I implemented it and adapted it for de...
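For readers who haven't seen the trick: the sketch below is not the code from this post, just a minimal illustration of the general idea. It assumes the textbook approach: generate Lyndon words in lexicographic order (Duval's algorithm) and concatenate the ones whose length divides n (the Fredricksen-Maiorana construction). The class and method names are made up for this example.

```java
// Sketch only, NOT the author's code: Duval's Lyndon-word generation
// combined with the Fredricksen-Maiorana concatenation rule.
final class DeBruijnSketch {

    /** Returns a de Bruijn sequence B(k, n) over the digits 0..k-1 (assumes 1 <= k <= 10, n >= 1). */
    static String deBruijn(int k, int n) {
        StringBuilder out = new StringBuilder();
        int[] w = new int[n]; // the current word lives in w[0..len-1]
        int len = 1;
        w[0] = -1;            // sentinel, so the first increment yields the word "0"

        while (len > 0) {
            w[len - 1]++;     // w[0..len-1] is now the next Lyndon word
            if (n % len == 0) {
                // Only Lyndon words whose length divides n are part of the sequence.
                for (int i = 0; i < len; i++) {
                    out.append(w[i]);
                }
            }
            int m = len;
            while (len < n) { // repeat the word periodically up to length n
                w[len] = w[len - m];
                len++;
            }
            while (len > 0 && w[len - 1] == k - 1) {
                len--;        // strip trailing maximal symbols
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(deBruijn(2, 3)); // prints 00010111
    }
}
```

Read cyclically, the output for k = 2 and n = 3 contains every 3-bit string exactly once.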

Biometric passwords: No!

Please sit down, we need to have a talk, programmer to programmer.

Over the last decade we’ve had a lot of problems with authentication. For example, we’ve stored plain text passwords in the database. We’ve learned from this and nobody is doing this anymore, right? If you are, please deposit your programming license in the nearest trash can.

Latest challenge: Biometrics

It is time to talk about the latest problem in IT: biometric data.

Some websites are using biometrics, such as your fingerprint, as your password. This sounds great: very hard to fake and unique to you. But there is a...

Deprecating Optional.get()

On the OpenJDK core mailing list (and Twitter) there is a discussion about Java’s Optional. Before diving into that discussion, let’s take a look at what Optional does and how you can use it.

Checking for null

What do you do when your code calls an external service or, god forbid, a microservice, and the result isn’t always available?

Most of the time the protocol you are using takes care of the optional part, for example in REST you’ll get a 404 instead of JSON. Getting this 404 forces you to think about this scenario and do something when this...
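To make the Optional discussion a bit more concrete, here is a minimal sketch of how a lookup that may come back empty can be modelled with `java.util.Optional`. The lookup table, class name and method names are invented for this example; only the `Optional` calls themselves (`ofNullable`, `orElse`, `map`, `get`) are the real Java 8 API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Sketch only: the "service" is a hypothetical in-memory map.
final class OptionalSketch {

    private static final Map<String, String> EMAILS = new HashMap<>();
    static {
        EMAILS.put("alice", "alice@example.com");
    }

    /** Wraps a possibly missing result instead of returning null. */
    static Optional<String> findEmail(String user) {
        return Optional.ofNullable(EMAILS.get(user));
    }

    /** Falls back to a default when nothing was found. */
    static String emailOrDefault(String user) {
        return findEmail(user).orElse("unknown");
    }

    /** Transforms the value only when it is present. */
    static String domainOf(String user) {
        return findEmail(user)
                .map(email -> email.substring(email.indexOf('@') + 1))
                .orElse("unknown");
    }

    public static void main(String[] args) {
        System.out.println(emailOrDefault("alice"));
        System.out.println(domainOf("bob"));
        // findEmail("bob").get() would throw NoSuchElementException here,
        // which is why calling get() without a check is controversial.
    }
}
```

The return type forces the caller to decide what happens in the empty case, much like the 404 does in REST.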

The collapse of Java EE?

Recently there has been a lot of discussion about the state of Java EE and Oracle’s stewardship. A lot seems to be happening. There is the fact that a lot of evangelists are leaving Oracle. There have been (Twitter) ‘fights’ between developers from Pivotal and Reza Rahman. And there are the Java EE Guardians, a group formed by Reza after he left Oracle.

And during the last JCP ‘Executive Committee Meeting Minutes’ the London Java Community (LJC) openly expressed their worries:

Martijn said that while he recognizes Oracle’s absolute right to pursue a product strategy and allocate...

Flipping the diamond - JEP 286

After yesterday’s blog post, ‘Pros and cons of JEP 286’, I received a lot of feedback.

The more I think about var/val, the more it seems that my biggest mental hurdle is the Java 7 diamond operator. The diamond operator is good, it eliminates typing, and I like it… but I have the feeling it could be so much better!

Instead of (or in addition to) adding var and val I’d love to see a solution where we could ‘flip’ the side of the diamond operator.

JavaMail API: Message in EML format

Our application was already using JavaMail (javax.mail.*) as a way to inform our users. But for logging purposes we wanted to store all the emails we send in our database (and make them downloadable using our GUI).

It turns out this is pretty easy to do!

Let’s start with some very basic email code we already had in place:

    // Some method to construct a MimeMessage:
    Message message = createMailMessage(input);
    Transport.send(message);

What we need to do now is to ‘render’ the entire...

Pros and cons of JEP 286

A couple of weeks ago a new JDK Enhancement Proposal (JEP) was published: JEP 286.

It proposes ‘var’ and possibly also ‘val’ as a way to declare local variables. This means that for local variables you don’t need to specify the type of your variable when it can be safely inferred.
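As a rough illustration of what this means in practice, the sketch below declares the same kind of local variable three ways: with the type arguments spelled out on both sides, with the Java 7 diamond operator, and with `var`. It assumes a Java 10 or later compiler, where `var` eventually shipped (`val` did not make it into the language); the class and method names are invented for this example.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch only: needs Java 10+ to compile because of 'var'.
final class LocalInferenceSketch {

    static List<String> describe() {
        // Pre-Java 7: type arguments repeated on both sides.
        Map<String, List<Integer>> explicit = new HashMap<String, List<Integer>>();

        // Java 7 diamond: the right-hand side is inferred from the left.
        Map<String, List<Integer>> diamond = new HashMap<>();

        // JEP 286 style: the left-hand side is inferred from the right.
        var inferred = new HashMap<String, List<Integer>>();

        explicit.put("a", new ArrayList<>());
        diamond.put("b", new ArrayList<>());
        inferred.put("c", new ArrayList<>());
        inferred.get("c").add(42); // still statically typed: only an Integer fits here

        var keys = new ArrayList<String>();
        keys.addAll(explicit.keySet());
        keys.addAll(diamond.keySet());
        keys.addAll(inferred.keySet());
        return keys;
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

Note that `var` only changes what you type, not the typing itself: `inferred` is a `HashMap<String, List<Integer>>` at compile time.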
